A07 - Project: Bicycle Sharing#

Kaplan, Daniel & Matthew Beckman. (2021). Data Computing. 2nd Ed. Home.

Revised

19 Jun 2023

Programming Environment#

library(dcData)

library(leaflet)

library(lubridate)

library(tidyverse)

sessionInfo()

Attaching package: ‘lubridate’

The following objects are masked from ‘package:base’:

date, intersect, setdiff, union

── Attaching core tidyverse packages ───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────── tidyverse 2.0.0 ──

✔ dplyr 1.1.2 ✔ readr 2.1.4

✔ forcats 1.0.0 ✔ stringr 1.5.0

✔ ggplot2 3.4.3 ✔ tibble 3.2.1

✔ purrr 1.0.2 ✔ tidyr 1.3.0

── Conflicts ─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────── tidyverse_conflicts() ──

✖ dplyr::filter() masks stats::filter()

✖ dplyr::lag() masks stats::lag()

ℹ Use the conflicted package (<http://conflicted.r-lib.org/>) to force all conflicts to become errors

R version 4.3.0 (2023-04-21)

Platform: aarch64-apple-darwin20 (64-bit)

Running under: macOS 14.4.1

Matrix products: default

BLAS: /Library/Frameworks/R.framework/Versions/4.3-arm64/Resources/lib/libRblas.0.dylib

LAPACK: /Library/Frameworks/R.framework/Versions/4.3-arm64/Resources/lib/libRlapack.dylib; LAPACK version 3.11.0

locale:

[1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

time zone: America/New_York

tzcode source: internal

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] forcats_1.0.0 stringr_1.5.0 dplyr_1.1.2 purrr_1.0.2

[5] readr_2.1.4 tidyr_1.3.0 tibble_3.2.1 ggplot2_3.4.3

[9] tidyverse_2.0.0 lubridate_1.9.2 leaflet_2.2.0 dcData_0.1.0

loaded via a namespace (and not attached):

[1] gtable_0.3.3 jsonlite_1.8.5 compiler_4.3.0 crayon_1.5.2

[5] tidyselect_1.2.0 IRdisplay_1.1 scales_1.2.1 uuid_1.1-0

[9] fastmap_1.1.1 IRkernel_1.3.2 R6_2.5.1 generics_0.1.3

[13] htmlwidgets_1.6.2 munsell_0.5.0 tzdb_0.4.0 pillar_1.9.0

[17] rlang_1.1.1 utf8_1.2.3 stringi_1.7.12 repr_1.1.6

[21] timechange_0.2.0 cli_3.6.1 withr_2.5.0 magrittr_2.0.3

[25] crosstalk_1.2.0 digest_0.6.31 grid_4.3.0 hms_1.1.3

[29] base64enc_0.1-3 pbdZMQ_0.3-9 lifecycle_1.0.3 vctrs_0.6.3

[33] evaluate_0.21 glue_1.6.2 fansi_1.0.4 colorspace_2.1-0

[37] tools_4.3.0 pkgconfig_2.0.3 htmltools_0.5.5

# information about the location of each of the stations in the system

station_url <- 'https://mdbeckman.github.io/dcSupplement/data/DC-Stations.csv'

Stations <- readr::read_csv(station_url)

# the rental history from 2014 Q4

# the `Trips` data table is a random subset of 10,000 trips from the full quarterly data

# the full data set of more than 600,000 trips can be accessed by removing `-Small` from the url

trip_url <- 'https://mdbeckman.github.io/dcSupplement/data/Trips-History-Data-2014-Q4-Small.rds'

Trips <- readRDS(gzcon(url(trip_url)))

source('https://mdbeckman.github.io/dcSupplement/R/haversine.R')

Rows: 347 Columns: 5

── Column specification ─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Delimiter: ","

chr (1): name

dbl (4): lat, long, nbBikes, nbEmptyDocks

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.

# Stations <-

# Stations %>%

# select(lat, long)

# Stations %>%

# head()

# Trips <-

# Trips %>%

# select(sstation, estation, client, sdate, edate)

# Trips %>%

# head()

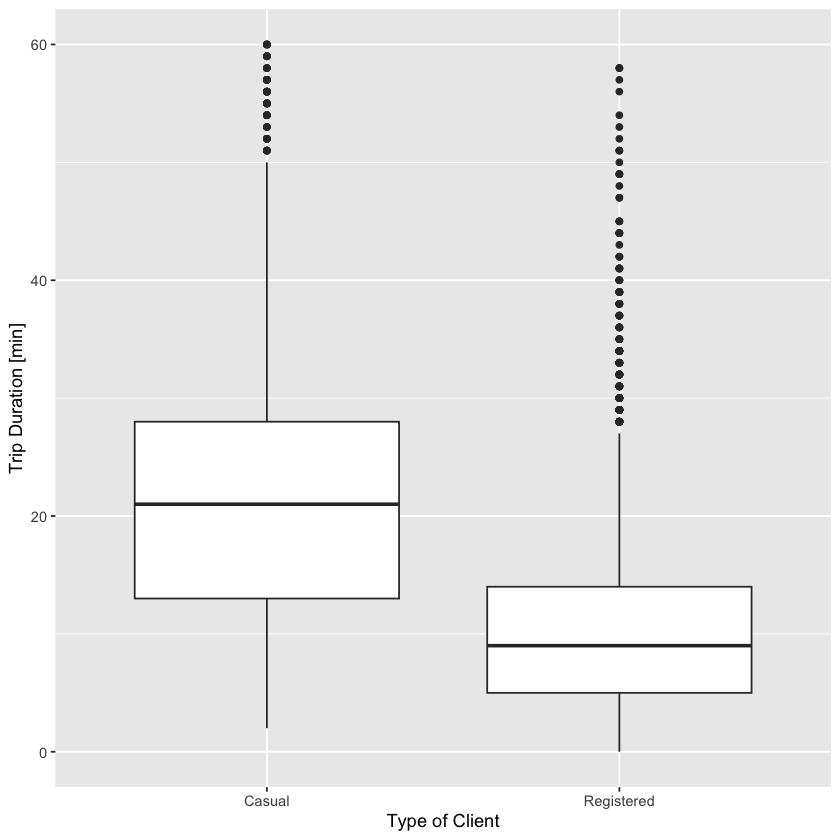

How long?#

Make a box-and-whisker plot showing the distribution of the duration of rental events broken down by client. The duration of the rental can be calculated as as.numeric(edate - sdate). The units will be in either hours, minutes, or seconds. It should not be much trouble for you to figure out which one. When you make your plot, you will likely find that the axis range is being set by a few outliers. These may be bikes that were forgotten. Arrange your scale to ignore these outliers, or filter them out.

When are bikes used?#

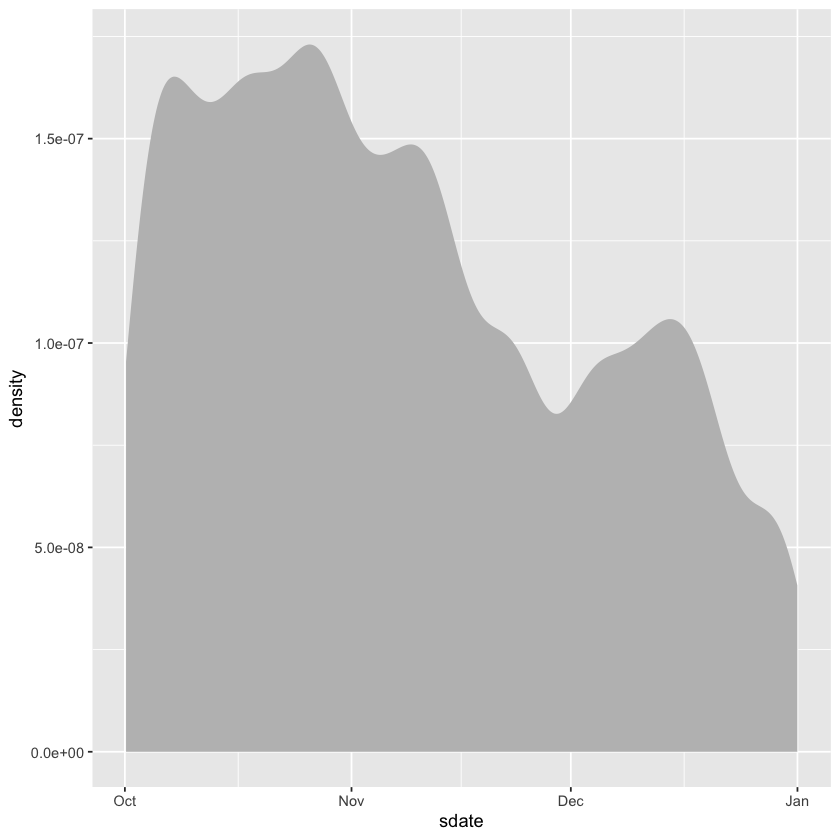

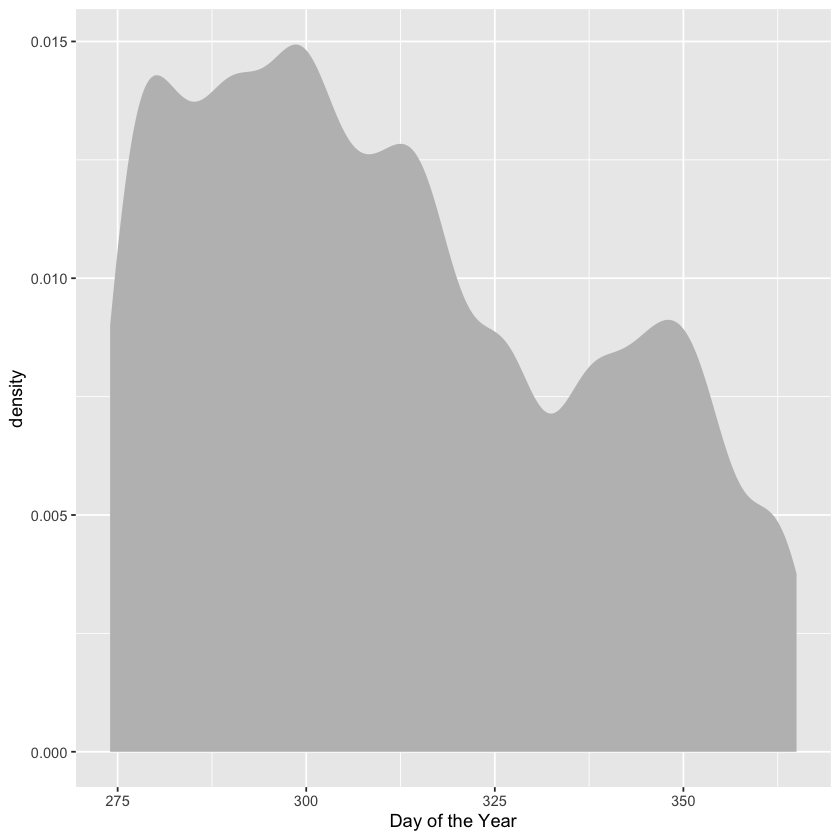

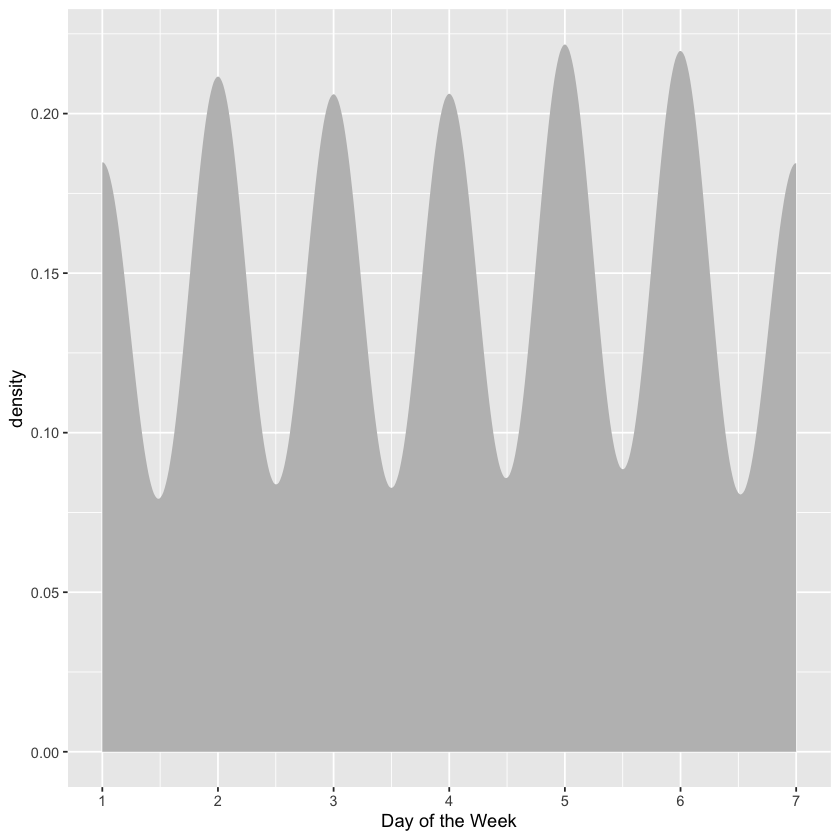

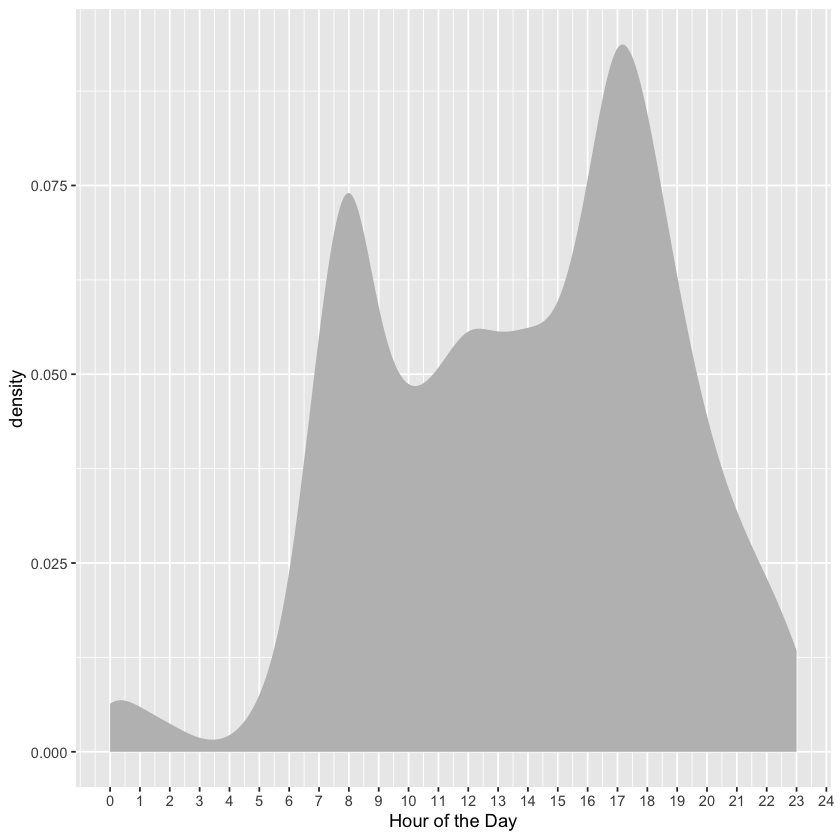

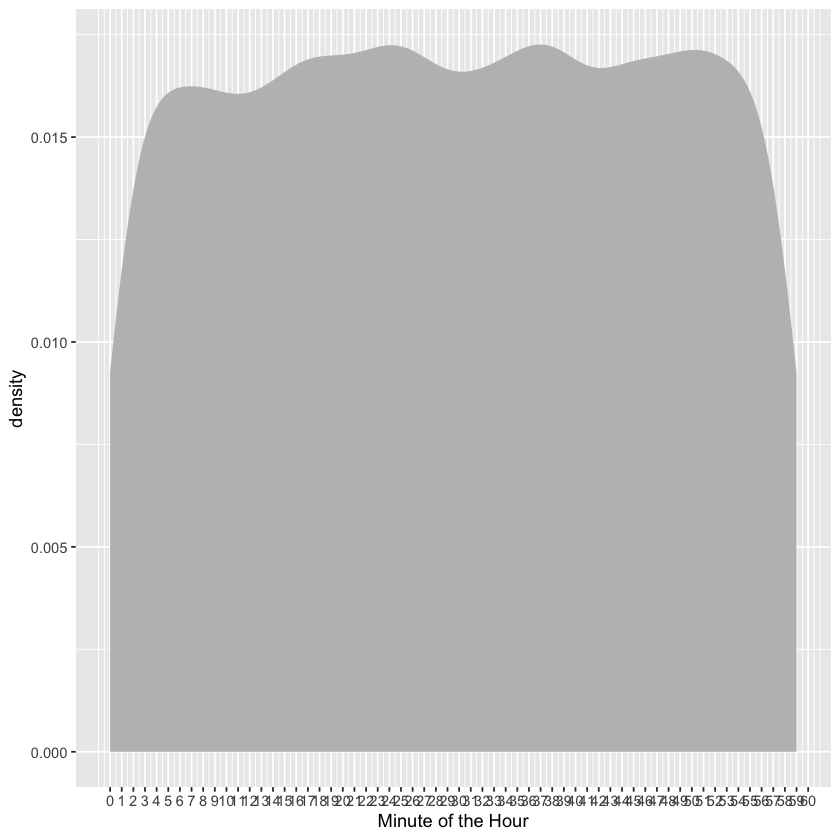

[1] Variable Trips$sdate indicates the datetime that the bicycle was checked out of the station. Make histograms or density plots of each of these discrete components. Explain what each plot is showing about how bikes are checked out. For example, the distribution by hour of the day shows that few bikes are checked out before 5am, and that there are busy times around rush hour: 8am and 5pm.

Day of the year: bike rentals are highest in October; decline in November; and pick up again in the first half of December.

Day of the week: bike rentals are fairly stable over the course of the week; Thursday and Friday see the most rentals, and Saturday and Sunday see the least. Monday sees a little bit more than Tuesday and Wednesday.

Hour of the day: bike rentals are low before 5:00am; spike up during morning rush hour; decrease a bit throughout the day; spike back up during evening rush hour; and rapidly decline to early morning levels into the night.

Minute of the hour: I’m not sure there’s much of a trend along this scale, other than that the numbers of rentals oscillate a bit (i.e., it is not fixed).

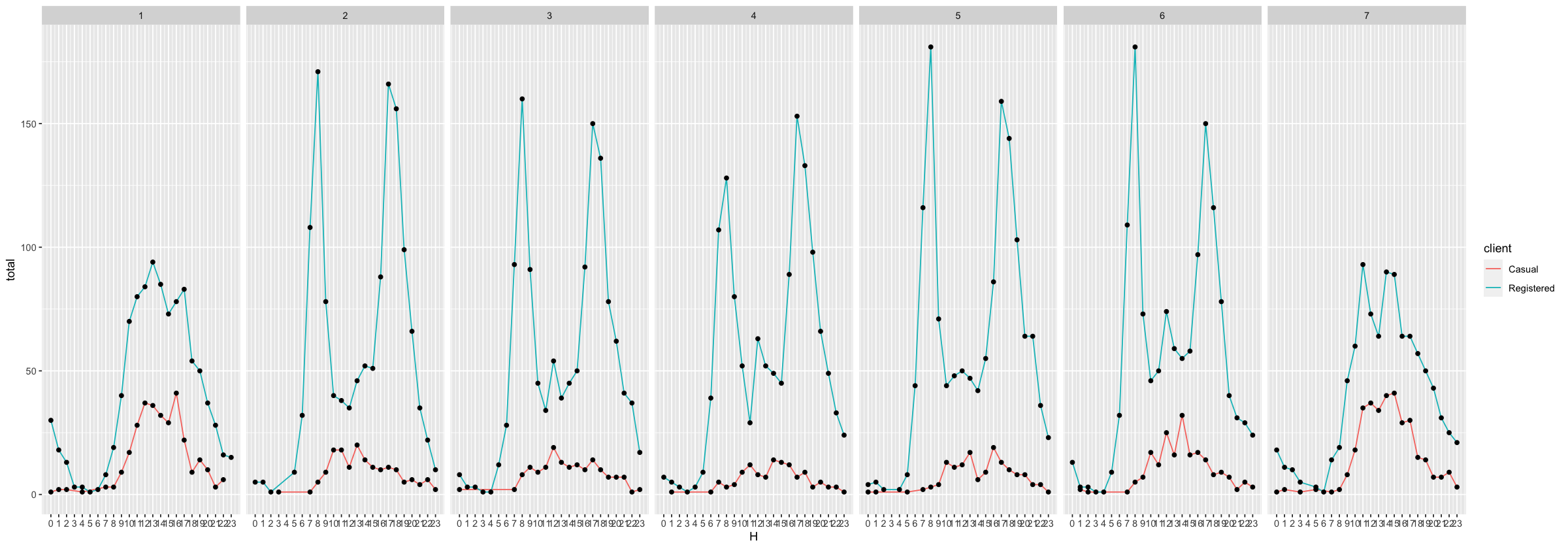

[2] A similar sort of display of events per hour can be accomplished by calculating and displaying each hour’s count. The graphic shows a lot of variation of bike use over the course of the day. Now consider two additional variables: the day of the week and the client type. Group the bike rentals by three variables: hour of the day, day of the week, and client type. Find the total number of events in each grouping and plot this count versus hour. Use the group aesthetic to represent one of the other variables and faceting to represent the other. (Hint: utilize facet_wrap() in the plotting commands.) Make the same sort of display of how bike rental vary of hour, day of the week, and client type, but use geom_density() rather than grouping and counting. Compare the two displays–one of discrete counts and one of density–and describe any major differences.

Generally, registered clients rent more bikes than casual clients across hour of the day and across day of the week. On an hourly basis, however, casual client rentals usually reach a maximum in the afternoon, when registered client rentals dip in between morning and evening rush hour. Also, casual client rentals peak over the weekend, when registered client rentals drop the most.

Trips$sdate[1]

lubridate::yday(Trips$sdate)[1] # day of the year from 1 to 365

lubridate::wday(Trips$sdate)[1] # day of the week from Sunday to Saturday

lubridate::hour(Trips$sdate)[1] # hour of the day

lubridate::minute(Trips$sdate)[1] # minute of the hour

[1] "2014-10-15 08:58:00 UTC"

Trips %>%

ggplot(aes(x = lubridate::yday(sdate))) +

geom_density(fill = 'gray', color = NA) +

xlab('Day of the Year')

Trips %>%

ggplot(aes(x = lubridate::wday(sdate))) +

geom_density(fill = 'gray', color = NA) +

xlab('Day of the Week') +

scale_x_continuous(breaks = 0:8)

Trips %>%

ggplot(aes(x = lubridate::hour(sdate))) +

geom_density(fill = 'gray', color = NA) +

xlab('Hour of the Day') +

scale_x_continuous(breaks = 0:24)

Trips %>%

ggplot(aes(x = lubridate::minute(sdate))) +

geom_density(fill = 'gray', color = NA) +

xlab('Minute of the Hour') +

scale_x_continuous(breaks = 0:60)

Trips %>%

mutate(

HOD = lubridate::hour(sdate),

DOW = lubridate::wday(sdate),

) %>%

group_by(HOD, DOW, client) %>%

summarize(total = n()) %>%

head()

`summarise()` has grouped output by 'HOD', 'DOW'. You can override using the `.groups` argument.

| HOD | DOW | client | total |

|---|---|---|---|

| <int> | <dbl> | <chr> | <int> |

| 0 | 1 | Casual | 1 |

| 0 | 1 | Registered | 30 |

| 0 | 2 | Registered | 5 |

| 0 | 3 | Casual | 2 |

| 0 | 3 | Registered | 8 |

| 0 | 4 | Registered | 7 |

options(repr.plot.width=20)

Trips %>%

mutate(

HOD = lubridate::hour(sdate),

DOW = lubridate::wday(sdate),

) %>%

group_by(HOD, DOW, client) %>%

summarize(total = n()) %>%

ggplot(mapping = aes(x = HOD, y = total)) +

geom_line(mapping = aes(color = client)) + # the `group` aesthetic is unnecessary when using the `color` aesthetic

geom_point() +

facet_wrap(. ~ DOW, nrow = 1) +

xlab('H') +

scale_x_continuous(breaks = 0:23)

How far?#

Find the distance between each pair of stations. You know the position from the lat and long variables in Stations. This is enough information to find the distance. The calculation has been implemented in the haversine() function. haversine() is a transformation function. To use it, create a data table where a case is a pair of stations and there are variables for the latitude and longitude of the starting station and the ending station. To do this, join the Station data to itself. The statements Simple and Simple2 show how to create appropriately named variables for joining. Look the head() of Simple and Simple2 and make sure you understand how they are related to Stations. The joining of Simple and Simple2 should match every station to every other station. (Since a ride can start and end at the same station, it also makes sense to match each station to itself.) This sort of matching does not make use of any matching variables; everything is matched to everything else. This is called a full outer join. (A full outer join matches every case in the left table to each and every case in the right table.) Make sure you understand what the full outer join does before proceeding. For instance, you should be able to predict how many cases the output will have when the left input has n cases and the right has m cases.

[1] There are 347 cases in the Stations data table. How many cases will there be in a full outer join of Simple to Simple2?

\(120409 = 347 \times 347\)

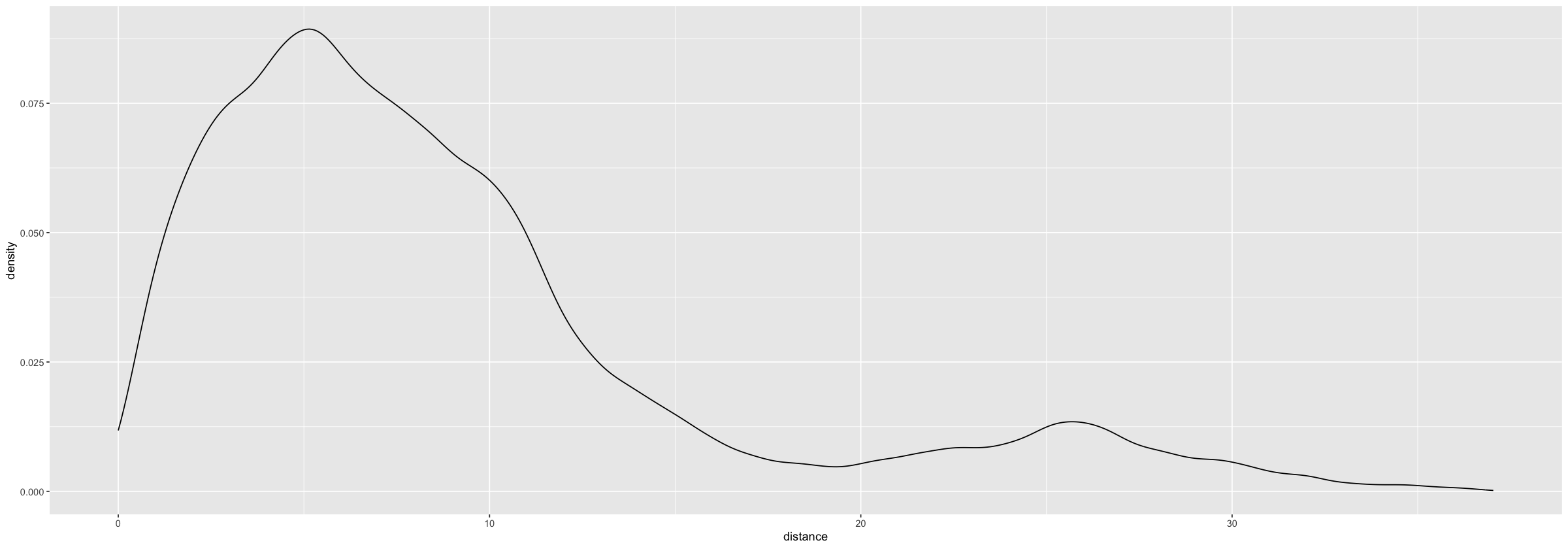

It’s often impractical to carry out a full outer join. For example, joining BabyNames to itself with a full outer join will generate a result with more than three trillion cases. (Three trillion cases from the BabyNames data is the equivalent of about 5 million hours of MP3 compressed music. A typical human lifespan is about 0.6 million hours.) Perform the full outer join and then use haversine() to compute the distance between each pair of stations. Check your result for sensibility. Make a histogram of the station-to-station distances and explain where it looks like what you would expect. (Hint: you could use the Internet to look up the distance from one end of Washington, D.C. to the other.) Once you have PairDistances, you can join it with Trips to calculate the start-to-end distance of each trip. (Of course, a rider may not go directly from one station to another.)

[2] Look at the variables in Stations and Trips and explain why Simple and Simple2 were given different variable names for the station.

Simple and Simple2 were given different variable names for the station because we want start station-end station pairs, which serves as the composite key on which we join the resulting table to the Trips table. If we don’t explicitly change the name of the name variable, then we won’t have a composite key on which to join the resulting table to the Trips table.

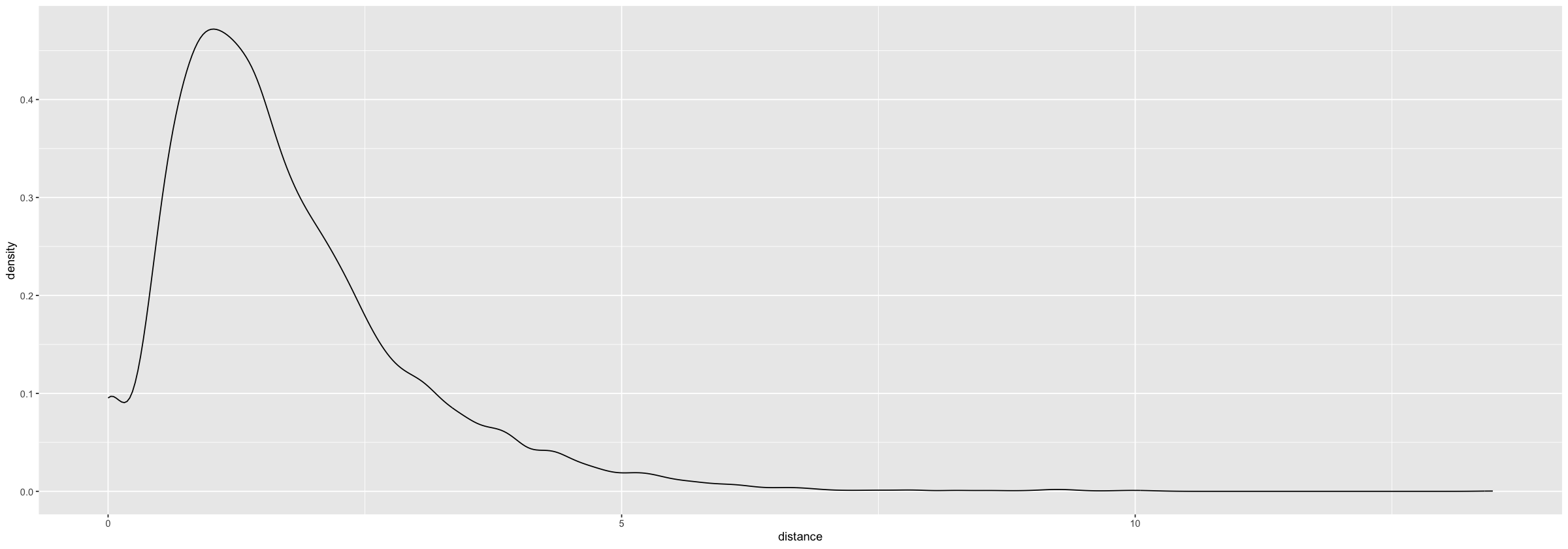

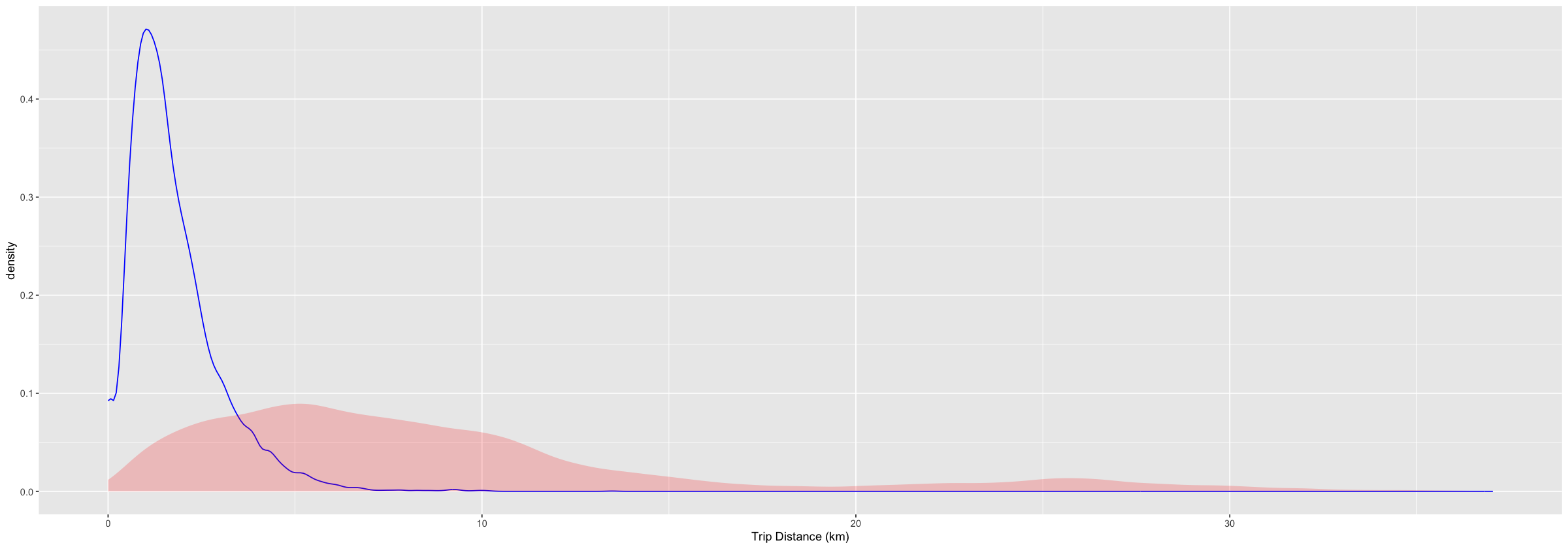

An inner_join() is appropriate for finding the distance of each ride. (Watch out! The Trips data and the PairDistances data are large enough that the join is expensive: it takes about a minute.) Display the distribution of the ride distances of the ride.

[3] Compare it to the distances between pairs of stations. Are they similar? Why or why not?

The visualization shows that the pairwise distance between stations has a larger spread than trip distance, which is skewed to the right (i.e., most trips are short, whereas a fair number of station-station distances are quite large: these routes are probably not used very often).

Simple <-

Stations %>%

select(name, lat, long) %>%

rename(sstation = name)

Simple2 <-

Simple %>%

rename(estation = sstation, lat2 = lat, long2 = long)

StationPairs <-

merge(Simple, Simple2, by = NULL)

PairDistances <-

StationPairs %>%

mutate(distance = haversine(lat, long, lat2, long2)) %>%

select(sstation, estation, distance)

Trips %>%

inner_join(PairDistances, by = c('sstation', 'estation')) %>%

ggplot(aes(x = distance)) +

geom_density()

Mapping the stations#

You can draw detailed maps with the leaflet package. You may need to install it. leaflet works much like ggplot() but provides special facilities for maps.

stationMap <-

leaflet(Stations) %>%

addTiles() %>%

addCircleMarkers(radius = 2, color = 'red')

#setView(-77.04, 38.9, zoom = 12)

stationMap

Assuming "long" and "lat" are longitude and latitude, respectively

Long-distance stations#

Around each station on the map, draw a circle whose radius reflects the median distance covered by rentals starting at that station. To draw the circles, use the same leaflet commands as before, but add in a line like this: addCircles(radius = ~ mid, color = "blue", opacity = 0.0001). For addCircles() to draw circles at the right scale, the units of the median distance should be presented in meters rather than kilometers. This will create too much overlap, unfortunately. So, set the radius to be half or one-third the median distance in meters. From your map, explain the pattern you see in the relationship between station location and median distance.