Polynomials#

Contents#

Univariate Polynomials#

REAL POLYNOMIAL FUNCTION IN ONE VARIABLE

A real polynomial function \(f\) in one variable is a map from the set \(\mathbb{R}\) to itself

\(f : \mathbb{R} \to \mathbb{R}\)

where the value \(f(x)\) of the function \(f\) at every real number \(x\) is given by a formula which is a real linear combination of nonnegative-integral powers of \(x\) (the same formula for all values of \(x\)).

A real polynomial in one variable is just a polynomial in one real variable. This is to say nothing of the nature of the coefficients.

POLYNOMIAL (EXPRESSION)

An expression which is a real linear combination of nonnegative-integral powers of \(x\) and which therefore defines a real polynomial function is called a polynomial (expression) with coefficients in \(\mathbb{R}\) (or with real coefficients). (The coefficients of a polynomial may be drawn from a rnage of mathematical structures. Polynomials with complex or rational coefficients, say, are defined similarly.)

\(a_0 x^0 + a_1 x^1 + \dotsb + a_i x^i + \dotsb\)

\(a_i x^i\) is called a term of the polynomial and \(a_i\) is the term’s coefficient.

It is required that a polynomial has finitely many nonzero terms (i.e., \(a_i = 0\) for all but a finite number of values of \(i\)). The power of \(x_i\) appears in the polynomial if \(a_i \ne 0\).

Notationally, we can use \(f(x)\) or just \(f\) to refer both to a polynomial expression or the function it defines. Thus we can write a polynomial expression or function in the following way.

\( \begin{aligned} f(x) = \sum_{i=0}^n a_i x^i = a_0 + a_1 x + \dotsb + a_n x^n \end {aligned} \)

If \(a_n \ne 0\) (i.e., if \(x^n\) is the highest power of \(x\) which appears in the polynomial) then \(a_n x^n\) is called the leading term and \(a_n\) is called the leading coefficient.

POLYNOMIAL EQUIVALENCE

\( \begin{aligned} p(x) &= \sum_{i=0}^{n} a_i x^i \\ q(x) &= \sum_{i=0}^{m} b_i x^i \\ \end {aligned} \)

then \(p = q\) iff \(a_i = b_i\) for all \(i \ge 0\).

Two polynomial expressions are equivalent if we can get from one to the other by rearranging terms (because real addition is commutative) and adding or deleting terms with \(0\) coefficient.

Example

\(1 \times x^3 + 2 \times x + (-1) = x^3 + 2x - 1 = x^3 + 0x^2 + 2x - 1 = -1 + 2x + x^3 + 0x^5\)

POLYNOMIAL DEGREE

For a given polynomial \(f\) with leading term \(a_n x^n\) the degree of \(f\) is \(n\) and we write

\(\deg f = n\)

Example

\(\deg (x^3 + 2x - 1) = 3\)

CONSTANT POLYNOMIAL

A constant polynomial is a polynomial of degree \(0\) with the form

\( \begin{aligned} f(x) = a \\ \end {aligned} \)

where \(a \in \mathbb{R}\).

The function defined by a constant polynomial is a constant function (i.e., its value does not depend on \(x\)).

The zero polynomial is also a constant polynomial.

ZERO POLYNOMIAL

The zero polynomial \(\bm{\mathit{0}}\) is the constant polynomial that has no nonzero coefficients.

\( \begin{aligned} \bm{\mathit{0}}(x) &= \sum_{i=0}^{n} 0x^i = 0 + 0x + \dotsb + 0x^n \\ &= 0 \\ \end {aligned} \)

The degree of the zero polynomial is conventionally defined to be \(-1\) but some authors define it to be \(-\infty\) or leave it undefined.

\(\deg \bm{\mathit{0}} = -1\)

LINEAR POLYNOMIAL

A linear polynomial is a polynomial of degree \(1\) with the form

\( \begin{aligned} f(x) = ax + b \\ \end {aligned} \)

where \(a, b \in \mathbb{R}\) and \(a \ne 0\).

QUADRATIC POLYNOMIAL

A quadratic polynomial is a polynomial of degree \(2\) with the form

\( \begin{aligned} f(x) = ax^2 + bx + c \\ \end {aligned} \)

where \(a, b, c \in \mathbb{R}\) and \(a \ne 0\).

CUBIC POLYNOMIAL

A cubic polynomial is a polynomial of degree \(3\) with the form

\( \begin{aligned} f(x) = ax^3 + bx^2 + cx + d \\ \end {aligned} \)

where \(a, b, c, d \in \mathbb{R}\) and \(a \ne 0\).

QUARTIC POLYNOMIAL

A quartic polynomial is a polynomial of degree \(4\) with the form

\( \begin{aligned} f(x) = ax^4 + bx^3 + cx^2 + dx + e \\ \end {aligned} \)

where \(a, b, c, d, e \in \mathbb{R}\) and \(a \ne 0\).

QUINTIC POLYNOMIAL

A quintic polynomial is a polynomial of degree \(5\) with the form

\( \begin{aligned} f(x) = ax^5 + bx^4 + cx^3 + dx^2 + ex + f \\ \end {aligned} \)

where \(a, b, c, d, e, f \in \mathbb{R}\) and \(a \ne 0\).

Multivariate Polynomials#

UNIVARIATE POLYNOMIAL

A univariate polynomial is a finite sum of terms where every term is the product of a coefficient and a monomial; a monomial is a power of the same variable; and all coefficients are of the same type.

MULTIVARIATE POLYNOMIAL

A multivariate polynomial is a finite sum of terms where every term is the product of a coefficient and a monomial; a monomial is the product of powers of variables; and all coefficients are of the same type.

POLYNOMIAL IN TWO REAL VARIABLES WITH REAL COEFFICIENTS

\( \begin{aligned} f(x_1, x_2) = \sum_{i, j = 0}^{m,n} a_{mn} x^m y^n = a_{00} + a_{10} x + a_{01} y + a_{11} xy + \dotsb + a_{mn} x^m y^n \end {aligned} \)

where \(a_{ij} \in \mathbb{R}\) is a constant and \(m\) and \(n\) are nonnegative integers.

If the leading coefficient \(a_{nm} \ne 0\), then the degree of the polynomial is \(n + m\).

LINEAR POLYNOMIAL IN TWO REAL VARIABLES WITH REAL COEFFICIENTS

A linear polynomial is a polynomial of degree \(1\) with the form

\( \begin{aligned} f(x, y) &= a_{10} x + a_{01} y + a_{00} \\ &= ax + by + c \\ \end {aligned} \)

where \(a, b \in \mathbb{R}\) and either \(a \ne 0\) or \(b \ne 0\).

QUADRATIC POLYNOMIAL IN TWO REAL VARIABLES WITH REAL COEFFICIENTS

A quadratic polynomial is a polynomial of degree \(2\) with the form

\( \begin{aligned} f(x, y) &= a_{20} x^2 + a_{11} x y + a_{02} y^2 + a_{10} x + a_{01} y + a_{00} \\ &= ax^2 + bxy + cy^2 + dx + ey + f \\ \end {aligned} \)

where \(a, b, c, d, e, f \in \mathbb{R}\) and either \(a \ne 0\), \(b \ne 0\), or \(c \ne 0\).

CUBIC POLYNOMIAL IN TWO REAL VARIABLES WITH REAL COEFFICIENTS

A cubic polynomial is a polynomial of degree \(3\) with the form

\( \begin{aligned} f(x, y) &= a_{30} x^3 + a_{21} x^2 y + a_{12} x y^2 + a_{03} y^3 + a_{20} x^2 + a_{11} x y + a_{02} y^2 + a_{10} x + a_{01} y + a_{00} \\ &= A x^3 + B x^2 y + C x y^2 + D y^3 + E x^2 + F x y + G y^2 + H x + I y + J \\ \end {aligned} \)

where \(a, b, c, d, e, f, g, h, i, j \in \mathbb{R}\) and either \(a \ne 0\), \(b \ne 0\), \(c \ne 0\), or \(d \ne 0\).

POLYNOMIAL IN SEVERAL REAL VARIABLES WITH REAL COEFFICIENTS

A polynomial \(f\) in \(k\) real variables

\(f : \mathbb{R}^k \to \mathbb{R}\)

and has the form

\( \begin{aligned} f(x_1, x_2, \dotsc, x_k) = \sum_{i_1, i_2, \dotsc, i_k = 0}^{n_1, n_2, \dotsc, n_k} a_{n_1 n_2 \dots n_k} x_1^{n_1} x_2^{n_2} \dots x_n^{n_k} \end {aligned} \)

\(P(x_1, x_2, ..., x_k) = \sum a_{n_1 n_2 ... n_k} x_1^{n_1} x_2^{n_2} ... x_k^{n_k}\)

where \(a_{i_1 i_2 \dots i_k} \in \mathbb{R}\) is a constant and \(n_1, n_2, \dotsc, n_k\) are nonnegative integers.

If the leading coefficient \(a_{n_1 n_2 \dots n_k} \ne 0\), then the degree of the polynomial is \(\sum n = n_1 + n_2 + \dotsb + n_k\).

POLYNOMIAL EVALUATION

\(\mathbb{R}[x]\) is the set of all (univariate) polynomials in the (real) variable \(x\) with real coefficients. The \(x\) which occurs in the expression of a polynomial should be thought of as a variable which may be substituted by an arbitrary (real) number \(\alpha\). This is called evaluating the polynomial at \(x = \alpha\). We write \(f(\alpha)\) for the (real) number which is obtained by replacing every occurrence of \(x\) in the expression of \(f(x)\) by \(\alpha\).

Example

\( \begin{aligned} f(x) &= -5x^7 - 6x^4 + 2 \\ \alpha &= -2 \\ f(\alpha) &= -5(-2)^7 - 6(-2)^4 + 2 = 546 \\ \end {aligned} \)

Where does the graph of a polynomial function intersect the \(x\)-axis?

ROOT

The real number \(\alpha\) is a zero, or root, of the polynomial \(f\) if \(f(\alpha) = 0\) (that is, if the graph of \(y = f(x)\) crosses the \(x\)-axis where \(x = \alpha\)).

Not every polynomial has a (real) zero. For example, if \(x\) is a real number then \(x^2\) is never negative and is only zero if \(x\) is zero, so \(x^2 + 1\) is never zero. Thus the polynomial \(x^2 + 1\) has no real roots. In order to find a zero of this equation we need to use complex numbers. In fact, every non-constant real polynomial has a zero, which may be a complex number. Further, if the real polynomial \(f\) has a complex zero \(\alpha\) then the complex conjugate of \(\alpha\) is also a zero.

A constant polynomial cannot have a zero unless it is actually the zero polynomial, in which case every number is a zero. So when we make general statements about zeros of polynomials we sometimes have to insert a clause excluding constant polynomials.

Example

For example, a linear polynomial function has as its graph a straight line. The slope and any point of intersection of the line with the \(x\)-axis and \(y\)-axis are easily determined from the coefficients \(a, b\) appearing in the formula \(f(x) = ax + b\) defining the values of \(f(x)\).

For a quadratic polynomial equation the corresponding graph may cross or touch the \(x\)-axis in two, one, or no points depending on the values of the constants \(a, b, c\) in the formula \(f(x) = ax^2 + bx + c\) defining the function. The formula for determining the zeros of \(f\) in terms of the coefficients \(a, b, c\) is

\( \begin{aligned} x = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a} \end {aligned} \)

\(f\) will have a repeated zero when \(b^2 = 4ac\); no real zero when \(b^2 - 4ac\) is negative; and two distinct real zeros when \(b^2 - 4ac\) is positive.

There are similar but more complicated formulae which give the zeros of polynomials of degree \(3\) and \(4\). The search for a formula for the zeros of a polynomial of degree \(5\) lays at the origins of group theory.

Abel proved that there is no formula of the general sort (i.e., involving arithmetic operations and taking roots) which gives the zeros of a general quintic polynomial. Nevertheless, for some types of quintics, and other higher-degree polynomials, there is a formula.

Galois gave the exact conditions on a polynomial for there to be a formula for its zeros. He did this by associating a group to each polynomial and then interpreted the existence of such a formula for the zeros of the polynomial in terms of the structure of this group.

There are some remarkable similarities between the set \(\mathbb{Z}\) of integers with its operations of addition and multiplication and the set \(\mathbb{R}[x]\) of polynomials with its algebraic operations.

The set of polynomials in one real variable with real coefficients#

\(\boxed{\mathbb{R}[x]}\)

Polynomial addition#

We obtain \(f + g\) by adding together corresponding powers of \(x\) in \(f\) and \(g\).

POLYNOMIAL ADDITION AND SUBTRACTION

Given \(f, g \in \mathbb{R}[x]\), say

\( \begin{aligned} f(x) &= a_0 + a_1 x + \dotsb + a_n x^n + \dotsb \\ g(x) &= a_0 + b_1 x + \dotsb + b_n x^n + \dotsb \\ \end {aligned} \)

Then their sum is

\( \begin{aligned} f(x) + g(x) = (a_0 + b_0) + (a_1 + b_1) x + \dotsb + (a_n + b_n) x^n + \dotsb \\ \end {aligned} \)

Of course all but a finite number of the coefficients \((a_i + b_i)\) will be zero, since this is so for \(f(x)\) and \(g(x)\).

If \(f\) has degree \(n\) so

\(f(x) = \sum_{i = 0}^n a_i x^i = a_0 + a_1 x + \dotsb + a_n x^n\)

with \(a_n \ne 0\) and \(g\) has degree \(m\) so

\(g(x) = \sum_{i = 0}^m a_i x^i = b_0 + b_1 x + \dotsb + b_m x^m\)

with \(b_m \ne 0\) then

\( \begin{aligned} (f + g)(x) &= \sum_{i=0}^k (a_i + b_i) x^i = (a_0 + b_0) + (a_1 + b_1) x + \dotsb + (a_k + b_k) x^k \\ &= \sum_{i=0}^k c_i x^i \text{ where } c_i = a_i + b_i \\ \end {aligned} \)

where \(k\) is the larger of \(n\) and \(m\).

If \(n \gt m\) so \(k = n\) then all the coefficients \(b_{m + 1} \dotsb b_n\) of \(g\) beyond \(b_m\) are \(0\). But it’s useful to have them there so that we can write down a formula for the sum \(f + g\) in a uniform way. We will see something similar when we define multiplication of polynomials.

The degree of \(f + g\) is less than or equal to \(k\), the larger of \(n\) and \(m\). It’s possible for the degree of \(f + g\) to be strictly smaller than this (for example, if \(f = x^2 + x\) and \(g = -x^2 - 1\)) because the leading terms might cancel. This can only happen if \(\deg f = \deg g\).

Subtraction is defined as the addition of \(f\) with the additive inverse \(-g\) of \(g\).

Example

\( \begin{aligned} f(x) &= 2x^2 - 5x + 3 \\ g(x) &= 5x - 2 \\ (f + g)(x) &= 2x^2 + 1 = (2 - 0)x^2 + (-5 + 5)x + (3 - 2) \\ \end {aligned} \)

POLYNOMIAL INVERSE

Let

\( \begin{aligned} f(x) &= \sum_{i = 0}^n a_i x^i \\ \end {aligned} \)

with \(a_n \ne 0\) be a polynomial. Then its additive inverse is

\( \begin{aligned} -f(x) = (-1) \sum_{i = 0}^n a_i x^i = -(a_0 + a_1 x + \dotsb + a_n x^n) = -a_0 - a_1 x - \dotsb - a_n x^n = \sum_{i = 0}^n (-a_i) x^i \\ \end {aligned} \)

It is evident that the sum of any polynomial \(f\) and its additive inverse \(-f\) results in the zero polynomial.

\( \begin{aligned} f + (-f) = \sum_{i = 0}^n a_i x^i + \sum_{i = 0}^n (-a_i) x^i = \sum_{i = 0}^n 0 x^i = 0 \end {aligned} \)

The set of univariate polynomials in one real variable with real coefficients with polynomial addition is a \(2\)-tuple \((\mathbb{R}[x], +)\). This structure is an Abelian group under polynomial addition.

Let \(f, g, h \in \mathbb{R}[x]\).

Closed

\(f + g \in \mathbb{R}[x]\)

Associative

\((f + g) + h = f + (g + h)\)

follows from the deinition of polynomial addition and from the fact that addition in \(\mathbb{R}\) is both commutative and associative.

Commutative

\(f + g = g + f\)

follows from the deinition of polynomial addition and from the fact that addition in \(\mathbb{R}\) is both commutative and associative.

Identity Element

\(f + \mathit{0} = f\) where \(\mathit{0}\) is the zero polynomial \(0 + 0x + \dotsb + 0x^n\)

Inverse Elements

\(f + (-f) = \mathit{0}\)

Polynomial multiplication#

This section is being constructed alongside the page on summations; in particular, the section on discrete convolutions. The derivations on that page may be more accurate.

To obtain the formula for \(fg\) take the formulae for \(f\) and \(g\) and multiply them together gathering together all the coefficients of the same power of \(x\).

POLYNOMIAL MULTIPLICATION

Given \(f, g \in \mathbb{R}[x]\), say

\( \begin{aligned} f(x) &= \sum_{i=0}^{n} a_i x^i = a_0 + a_1 x + \dotsb + a_n x^n \\ g(x) &= \sum_{j=0}^{m} b_j x^j = b_0 + b_1 x + \dotsb + b_m x^m \\ \end {aligned} \)

be polynomials. Then their product is

\( \begin{aligned} (fg)(x) := f(x)g(x) = \left( \sum_{i=0}^{n} a_i x^i \right) \left( \sum_{j=0}^{m} b_j x^j \right) = \sum_{i=0}^{n} \sum_{j=0}^{m} a_i b_j x^i x^j \end {aligned} \)

If we wish to write this in standard form (i.e., as a sum of single powers of \(x\)) then we need to consider powers of degree \(i + j = k\) (which implies \(j = k - i\)).

\( \begin{aligned} \sum_{i=0}^{n} \sum_{j=0}^{m} a_i b_j x^i x^j = \sum_{i=0}^{n} \sum_{j=0}^{m} a_i b_{k-i} x^k \end {aligned} \)

\(k\) ranges from \(0\) to \(m + n\) but our sums are coupled since \(i\) depends on \(k\). This dependence is exactly that \(i\) ranges from \(0\) to \(k\). The easy way to see this is just by writing out a few cases, but logically it follows because \(a_0 x^0 b_k x^k\) results in a power of \(k\).

\( \begin{aligned} (fg)(x) &= \sum_{k=0}^{n+m} \underbrace{\left( \sum_{j=0}^{k} a_{k-j} b_j \right)}_{c_k} x^k \\ &= \sum_{k=0}^{n+m} \underbrace{\left( \sum_{i=0}^{k} a_i b_{k-i} \right)}_{c_k} x^k = c_0 + c_1 x + \dotsb + c_{n+m} x^{n+m} \\ \end {aligned} \)

The term inside the parentheses is the discrete convolution of the coefficients.

where

\( \begin{aligned} c_0 &= a_0 b_0 \\ c_1 &= a_0 b_1 + a_1 b_0 \\ c_2 &= a_0 b_2 + a_1 b_1 + a_2 b_0 \\ c_3 &= a_0 b_3 + a_1 b_2 + a_2 b_1 + a_3 b_0 \\ c_4 &= a_0 b_4 + a_1 b_3 + a_2 b_2 + a_3 b_1 + a_4 b_0 \\ c_5 &= a_0 b_5 + a_1 b_4 + a_2 b_3 + a_3 b_2 + a_4 b_1 + a_5 b_0 \\ \vdots \\ c_k &= \sum_{i=0}^k a_i b_{k-i} = a_0 b_k + a_1 b_{k - 1} + a_2 b_{k - 2} + \dotsb + a_{k - 1} b_1 + a_k b_0 \\ \vdots \\ \end {aligned} \)

As with polynomial addition any undefined constants are zero.

Provided \(f\) and \(g\) are nonzero the degree of \(fg\) is the sum of the degree of \(f\) and the degree of \(g\) because if \(\deg f = n\) so \(a_n \ne 0\) and \(\deg g = m\) so \(b_m \ne 0\) then every coefficient \(c_\ell\) with \(\ell \gt n + m\) is \(0\) and the coefficient \(c_{n + m}\) of \(x^{n + m}\) is \(a_n b_m\), which is nonzero.

(The Cauchy product generalizes polynomial multiplication to power series multiplication.)

Example

\( \begin{aligned} f(x) &= a_0 + a_1 x + a_2 x^2 + a_3 x^3 \\ g(x) &= b_0 + b_1 x + b_2 x^2 \\ (fg)(x) &= a_0 b_0 + a_0 b_1 x + a_0 b_2 x^2 + a_1 b_0 x + a_1 b_1 x^2 + a_1 b_2 x^3 + a_2 b_0 x^2 + a_2 b_1 x^3 + a_2 b_2 x^4 + a_3 b_0 x^3 + a_3 b_1 x^4 + a_3 b_2 x^5 \\ &= a_0 b_0 + (a_0 b_1 + a_1 b_0) x + (a_0 b_2 + a_1 b_1 + a_2 b_0) x^2 + (a_1 b_2 + a_2 b_1 + a_3 b_0) x^3 + (a_2 b_2 + a_3 b_1) x^4 + a_3 b_2 x^5 \end {aligned} \)

\( \begin{aligned} c_0 &= a_0 b_0 \\ c_1 &= a_0 b_1 + a_1 b_0 \\ c_2 &= a_0 b_2 + a_1 b_1 + a_2 b_0 \\ c_3 &= \phantom{a_0 b_3 + } a_1 b_2 + a_2 b_1 + a_3 b_0 \\ c_4 &= \phantom{a_0 b_4 + a_1 b_3 + } a_2 b_2 + a_3 b_1 \phantom{ + a_4 b_0} \\ c_5 &= \phantom{a_0 b_5 + a_1 b_4 + a_2 b_3 + } a_3 b_2 \phantom{ + a_4 b_1 + a_5 b_0} \\ \end {aligned} \)

Example

\( \begin{aligned} f(x) &= 2x^2 - 5x + 3 && = \phantom{-}3 x^0 - 5 x^1 + 2 x^2 \\ g(x) &= 5x - 2 && = -2 x^0 + 5x^1 + 0 x^2 \\ (fg)(x) &&&= -3.2 + 3.5x + 3.0x^2 + 5.2x - 5.5x^2 - 5.0x^3 - 2.2x^2 + 2.5x^3 + 2.0x^4 \\ &&&= -3.2 + (3.5 + 5.2)x + (3.0 - 5.5 - 2.2)x^2 + (-5.0 + 2.5)x^3 + 2.0x^4 \\ &&&= -6 + 25x - 29x^2 + 10x^3 \\ \end {aligned} \)

The set of univariate polynomials in one real variable with real coefficients with polynomial multiplication is a \(2\)-tuple \((\mathbb{R}[x], \times)\).

Let \(f, g, h \in \mathbb{R}[x]\).

Closed

\(fg \in \mathbb{R}[x]\)

Associative

\((fg)h = f(gh)\)

Commutative

\(f + g = g + f\)

Identity Element

\(f + \mathit{0} = f\) where \(\mathit{0}\) is the zero polynomial \(0 + 0x + \dotsb + 0x^n\)

Inverse Elements

\(f + (-f) = \mathit{0}\)

Proof of associativity

\( \begin{aligned} f(x) &= \sum_{i=0}^{m} a_i x^i \\ g(x) &= \sum_{i=0}^{n} b_i x^i \\ h(x) &= \sum_{i=0}^{p} c_i x^i \\ \end {aligned} \)

\( \begin{aligned} (fg)h &= \left[ \left( \sum_{i=0}^{m} a_i x^i \right) \left( \sum_{i=0}^{n} b_i x^i \right) \right] \left( \sum_{i=0}^{p} c_i x^i \right) \\ &= \left[ \sum_{i=0}^{m+n} \underbrace{\left( \sum_{j=0}^{i} a_j b_{i-j} \right)}_{c_i} x^i \right] \left( \sum_{i=0}^{p} c_i x^i \right) \\ &= \sum_{i=0}^{m+n+p} \left[ \sum_{j=0}^{i} \underbrace{\left( \sum_{k=0}^{j} a_k b_{j-k} \right)}_{\cdot_j} c_{i-j} \right] x^i \\ &= \sum_{i=0}^{m+n+p} \left( \sum_{j+k+\ell=i} a_j b_k c_\ell \right) x^i \\ &= \sum_{i=0}^{m+n+p} \left[ \sum_{j=0}^{i} a_j \underbrace{\left( \sum_{k=0}^{i-j} b_k c_{i-j-k} \right)}_{\cdot_{i-j}} \right] x^i \\ &= \left( \sum_{i=0}^{m} a_i x^i \right) \left[ \sum_{i=0}^{n+p} \left( \sum_{j=0}^{i} b_j c_{i-j} \right) x_i \right] \\ &= \left( \sum_{i=0}^{m} a_i x^i \right) \left[ \left( \sum_{i=0}^{n} b_i x^i \right) \left( \sum_{i=0}^{p} c_i x^i \right) \right] \\ &= f(gh) \\ \end {aligned} \)

POLYNOMIAL DIFFERENTIATION

Let

\( \begin{aligned} f(x) &= \sum_{k = 0}^n a_k x^k \\ \end {aligned} \)

be a polynomial. Then its derivative is

\( \begin{aligned} f'(x) = \sum_{k = 0}^n k a_k x^{k - 1} \\ \end {aligned} \)

Resources#

https://homepages.math.uic.edu/~jan/mcs320/polynomialsfactor.pdf

https://cseweb.ucsd.edu/~gill/CILASite/Resources/10Chap6.pdf

https://users.math.msu.edu/users/halljo/classes/codenotes/polyalg.pdf

https://www.math.columbia.edu/~khovanov/MA2_2022/files/02_polynomials.pdf

Figures#

[ w ]

1802-1829Abel, Niels[ w ]

1786-1837Horner, William[ w ]

1736-1813Lagrange, Joseph-Louis[ w ]

1813-1854Laurent, Pierre[ w ]

1668-1715Raphson, Joseph[ w ]

1765-1822Ruffini, Paolo[ w ]

1540-1603Viète, François

Terms#

[ w ] list of polynomial topics

[ w ] Abel-Ruffini Theorem

[ w ] Algebraic Expression

[ w ] Algebraic Solution

[ w ] Bezout’s Theorem

[ w ] Binomial

[ w ] Characteristic Polynomial

[ w ] Coefficient

[ w ] Constant Term

[ w ] Cyclotomic Polynomial

[ w ] Exponential Polynomial

[ w ] Factor Theorem

[ w ] Fundamental Theorem of Algebra

[ w ] Gauss’ Lemma

[ w ] Hensel’s Lemma

[ w ] Homogeneous Polynomial

[ w ] Horner’s Method

[ w ] Integer-Valued Polynomial

[ w ] Integral Domain

[ w ] Irreducible Polynomial

[ w ] Laurent Polynomial

[ w ] Like Terms

[ w ] Matrix Polynomial

[ w ] Monic Polynomial

[ w ] Monomial

[ w ] Multilinear Polynomial

[ w ] Newton’s Method

[ w ] Orthogonal Polynomial

[ w ] Polynomial

[ w ] Polynomial Degree

[ w ] Polynomial Equation

[ w ] Polynomial Evaluation

[ w ] Polynomial Factorization

[ w ] Polynomial GCD

[ w ] Polynomial Long Division

[ w ] Polynomial Remainder Theorem

[ w ] Polynomial Ring

[ w ] Polynomial Root, geometric properties

[ w ] Polynomial System

[ w ] Positive Polynomial

[ w ] Primitive Polynomial

[ w ] Rational Root Theorem

[ w ] Root

[ w ] Root-Finding Algorithm

[ w ] Ruffini’s Rule

[ w ] Synthetic Division

[ w ] Theory of Equations

[ w ] Trigonometric Polynomial

[ w ] Trinomial

[ w ] Vieta’s Formulas

General polynomial in one variable

A function \(P : \mathbf{F} \rightarrow \mathbf{F}\) is called a polynomial with coefficients in a field \(\mathbf{F}\) if there exist \(a_0, ..., a_n \in \mathbf{F}\) such that \(P(x) = a_n x^n + ... + a_2 x^2 + a_1 x + a_0\) for all \(x \in \mathbf{F}\).

Claim

Let \(a_0, ..., a_n \in \mathbf{F}\).

If \(a_n x^n + ... + a_2 x^2 + a_1 x + a_0 = 0\) for all \(x \in \mathbf{F}\), then \(a_0 = ... = a_n = 0\) (If a polynomial is the zero function, then all coefficients are 0.)

Proof by Contrapositive

If not all the coefficients are 0, then \(a_n x^n + ... + a_2 x^2 + a_1 x + a_0 \ne 0\).

Let

\(

\begin{align}

\frac{|a_0| + |a_1| + ... + |a_{n - 1}|}{|a_n|} + 1 &= x & \text{note that}\, x \ge 1 \,\text{and}\, x^j \le x^{n - 1} \,\text{for}\, j = 0, 1, ..., n - 1 \\

|a_0| + |a_1| + ... + |a_{n - 1}| &= |a_n|(x - 1) \\

|a_0| + |a_1| + ... + |a_{n - 1}| &\lt |a_n|x \\

(|a_0| + |a_1| + ... + |a_{n - 1}|)x^{n - 1} &\lt |a_n x^n| \\

|a_0 + a_1 x + ... + a_{n - 1} x^{n - 1}| \le (|a_0| + |a_1| + ... + |a_{n - 1}|) x^{n - 1} &\lt |a_n x^n| \\

|a_0 + a_1 x + ... + a_{n - 1} x^{n - 1}| &\lt |a_n x^n| \\

a_0 + a_1 x + ... + a_{n - 1} x^{n - 1} &\ne -a_n x^n \\

a_0 + a_1 x + ... + a_{n - 1} x^{n - 1} + a_n x^n &\ne 0 \\

\end{align}

\)

\(\blacksquare\)

import numpy as np

import matplotlib.pyplot as plt

plt.style.use('seaborn-pastel')

plt.rcParams['figure.figsize'] = (10, 6)

from itertools import zip_longest

---------------------------------------------------------------------------

FileNotFoundError Traceback (most recent call last)

File ~/anaconda3/envs/ml/lib/python3.11/site-packages/matplotlib/style/core.py:137, in use(style)

136 try:

--> 137 style = _rc_params_in_file(style)

138 except OSError as err:

File ~/anaconda3/envs/ml/lib/python3.11/site-packages/matplotlib/__init__.py:873, in _rc_params_in_file(fname, transform, fail_on_error)

872 rc_temp = {}

--> 873 with _open_file_or_url(fname) as fd:

874 try:

File ~/anaconda3/envs/ml/lib/python3.11/contextlib.py:137, in _GeneratorContextManager.__enter__(self)

136 try:

--> 137 return next(self.gen)

138 except StopIteration:

File ~/anaconda3/envs/ml/lib/python3.11/site-packages/matplotlib/__init__.py:850, in _open_file_or_url(fname)

849 fname = os.path.expanduser(fname)

--> 850 with open(fname, encoding='utf-8') as f:

851 yield f

FileNotFoundError: [Errno 2] No such file or directory: 'seaborn-pastel'

The above exception was the direct cause of the following exception:

OSError Traceback (most recent call last)

Cell In[1], line 3

1 import numpy as np

2 import matplotlib.pyplot as plt

----> 3 plt.style.use('seaborn-pastel')

4 plt.rcParams['figure.figsize'] = (10, 6)

6 from itertools import zip_longest

File ~/anaconda3/envs/ml/lib/python3.11/site-packages/matplotlib/style/core.py:139, in use(style)

137 style = _rc_params_in_file(style)

138 except OSError as err:

--> 139 raise OSError(

140 f"{style!r} is not a valid package style, path of style "

141 f"file, URL of style file, or library style name (library "

142 f"styles are listed in `style.available`)") from err

143 filtered = {}

144 for k in style: # don't trigger RcParams.__getitem__('backend')

OSError: 'seaborn-pastel' is not a valid package style, path of style file, URL of style file, or library style name (library styles are listed in `style.available`)

class Polynomial:

def __init__ (self, *coefficients):

self.coefficients = list(coefficients)

def __repr__ (self):

return f"Polynomial {str(tuple(self.coefficients))}"

def __str__ (self):

def x_expr (degree):

if degree == 0:

out = ''

elif degree == 1:

out = 'x'

else:

out = f'x^{str(degree)}'

return out

degree = len(self.coefficients) - 1

out = ''

for i in range(len(self.coefficients)):

coef = self.coefficients[i]

if abs(coef) == 1 and i < degree:

out += f"{'+' if coef > 0 else '-'}{x_expr(degree - i)}"

elif coef != 0:

out += f"{coef:+g}{x_expr(degree - i)}"

return out.lstrip('+')

def __call__ (self, x):

#return [0 * x + coef for coef in self.coefficients]

return sum([coef * x ** index for index, coef in enumerate(self.coefficients[::-1])])

def degree (self):

return len(self.coefficients)

def __add__ (self, other):

P1 = self.coefficients[::-1]

P2 = other.coefficients[::-1]

return self.__class__(*[sum(t) for t in zip_longest(P1, P2, fillvalue=0)][::-1])

def __sub__ (self, other):

P1 = self.coefficients[::-1]

P2 = other.coefficients[::-1]

return self.__class__(*[t1 - t2 for t1, t2 in zip_longest(P1, P2, fillvalue=0)][::-1])

def derivative (self):

derived_coefs = []

exponent = len(self.coefficients) - 1

for i in range(len(self.coefficients) - 1):

derived_coefs.append(self.coefficients[i] * exponent)

exponent -= 1

return self.__class__(*derived_coefs)

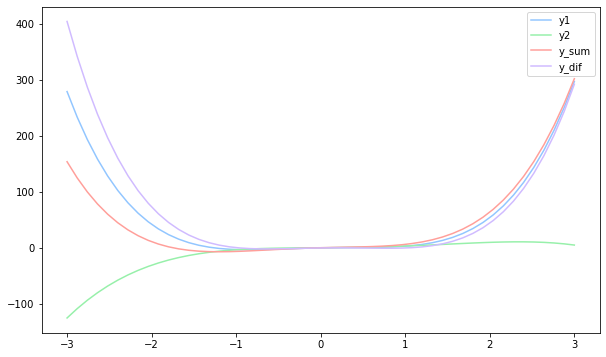

p1 = Polynomial(4, 0, -4, 3, 0)

p2 = Polynomial(-0.8, 2.3, 0.5, 1, 0.2)

p_sum = p1 + p2

p_dif = p1 - p2

x = np.linspace(-3, 3, 51)

y1 = p1(x)

y2 = p2(x)

y_sum = p_sum(x)

y_dif = p_dif(x)

plt.plot(x, y1, label='y1')

plt.plot(x, y2, label='y2')

plt.plot(x, y_sum, label='y_sum')

plt.plot(x, y_dif, label='y_dif')

plt.legend();

n = 5

display(

(n + 1)**2,

(n + 1)**2 - sum(range(n + 1)),

sum(range(n + 2)),

sum(range(n + 1)),

)

36

21

21

15

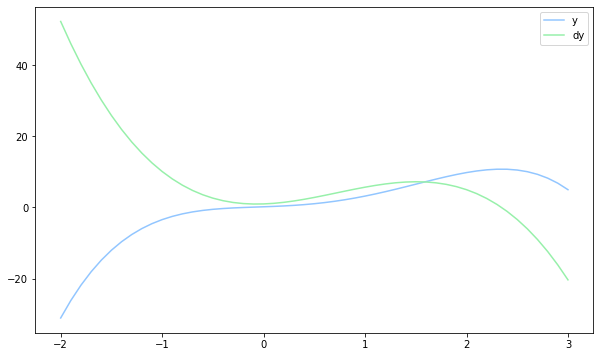

p = Polynomial(-0.8, 2.3, 0.5, 1, 0.2)

dp = p.derivative()

x = np.linspace(-2, 3, 51)

y = p(x)

df = dp(x)

plt.plot(x, y, label='y')

plt.plot(x, df, label='dy')

plt.legend();

print(p)

print(dp)

-0.8x^4+2.3x^3+0.5x^2+x+0.2

-3.2x^3+6.9x^2+x+1

for count, poly in enumerate([p]):

print(f'$p_{count} = {str(poly)}$')

$p_0 = -0.8x^4+2.3x^3+0.5x^2+x+0.2$